General Theory of Information Transfer

P. Algoet: Universal Parsimonious Prequential Modeling Schemes for Stationary Processes with Densities

Let ![]() be a normalized reference measure on a standard Borel space

be a normalized reference measure on a standard Borel space ![]() and let

and let ![]() be a probability measure on the sequence space

be a probability measure on the sequence space

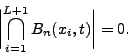

![]() such that

for all

such that

for all ![]() , the marginal distribution

, the marginal distribution

![]() of the segment

of the segment

![]() admits a density

admits a density

A prequential (sequential predictive) modeling scheme is a sequence of conditional

probability densities

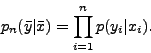

![]() . The compounded likelihood

. The compounded likelihood

![]() cannot

grow faster than exponentially with limiting rate

cannot

grow faster than exponentially with limiting rate

![]() :

:

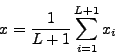
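A minimal sketch of a prequential scheme and its compounded likelihood, assuming a finite alphabet (for instance a quantized version of the process) with the uniform distribution as the normalized reference measure, so that the density of a conditional probability is the alphabet size times that probability. The Krichevsky-Trofimov (add-1/2) estimator used here is our own illustrative choice, not the construction of the abstract, and all function names are hypothetical.

```python
import math
import random
from collections import Counter

def kt_density(counts, symbol, alphabet_size):
    """Density of the KT conditional law q(symbol | past) w.r.t. the uniform reference measure."""
    total = sum(counts.values())
    prob = (counts[symbol] + 0.5) / (total + 0.5 * alphabet_size)
    return alphabet_size * prob  # divide by the reference mass 1 / alphabet_size

def log_compounded_likelihood(sequence, alphabet_size):
    """log of prod_t q(x_t | x^{t-1}); the model is updated only after each prediction."""
    counts = Counter()
    log_q = 0.0
    for symbol in sequence:
        log_q += math.log(kt_density(counts, symbol, alphabet_size))
        counts[symbol] += 1
    return log_q

# Hypothetical data: i.i.d. bits with P(1) = 0.1.
rng = random.Random(0)
seq = [1 if rng.random() < 0.1 else 0 for _ in range(10_000)]
rate = log_compounded_likelihood(seq, alphabet_size=2) / len(seq)
print(rate)
```

For such memoryless data the per-symbol rate approaches log 2 minus the empirical entropy (in nats). A KT scheme only competes with memoryless models, so it would not attain the optimal rate for processes with memory.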

We construct a universal parsimonious prequential modeling scheme for stationary processes with densities using the following ingredients.

1. The universal data compression algorithm of Lempel and Ziv is used to construct universal prequential modeling schemes for quantized versions of the process ![]() .

2. A prequential modeling scheme for a quantized version can be lifted to a prequential modeling scheme for the process itself according to the minimum divergence principle.

3. Several modeling schemes for the process ![]() are combined into a mixture. This is the essence of Bayesian modeling.

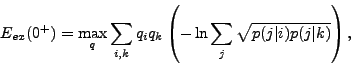

4. The information divergence rate ![]() of the process ![]() is equal to the maximum information divergence rate of quantized versions of the process (Pinsker's formula).
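Ingredient 3 can be sketched as a sequential Bayes mixture: at each step the mixture's conditional probability is the posterior-weighted average of the component conditionals, so its compounded likelihood equals the prior-weighted sum of the components' compounded likelihoods. The components below are fixed-parameter Bernoulli schemes on a binary (quantized) alphabet, a hypothetical stand-in for the Lempel-Ziv-based schemes of the abstract; all names are ours.

```python
import math

def logsumexp(values):
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))

def bernoulli_scheme(theta):
    """Hypothetical component: q(x_t = 1 | past) = theta, ignoring the past."""
    return lambda past, x: theta if x == 1 else 1.0 - theta

def mixture_log_likelihood(sequence, components, prior):
    """Return (log q_mix(x^n), [log w_k + log q_k(x^n) for each component k])."""
    log_post = [math.log(w) for w in prior]  # log w_k + log q_k(x^{t-1}), unnormalized
    mix_ll, past = 0.0, []
    for x in sequence:
        before = logsumexp(log_post)
        log_post = [lp + math.log(c(past, x)) for lp, c in zip(log_post, components)]
        # log q_mix(x_t | x^{t-1}) = log sum_k w_k q_k(x^t) - log sum_k w_k q_k(x^{t-1})
        mix_ll += logsumexp(log_post) - before
        past.append(x)
    return mix_ll, log_post

thetas = [0.1, 0.3, 0.5, 0.7, 0.9]
prior = [1 / len(thetas)] * len(thetas)
seq = [1 if i % 10 == 0 else 0 for i in range(1000)]
mix_ll, log_post = mixture_log_likelihood(seq, [bernoulli_scheme(t) for t in thetas], prior)
best = max(lp - math.log(w) for lp, w in zip(log_post, prior))  # best single component
print(mix_ll - best)
```

Because the mixture dominates each weighted component, `mix_ll - best` lies between `-log 5` and 0: mixing costs at most `log(1/w_k)` nats against any component, whatever the data.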

Parsimonious prequential modeling schemes provide the solution to the following problems.

1. Universal procedures for discrimination or hypothesis testing.

Suppose the null hypothesis ![]() is rejected in the event ![]() . Then ![]() will be rejected eventually almost surely whenever ![]() is stationary ergodic with distribution ![]() satisfying ![]() . The probability ![]() that ![]() is incorrectly rejected when in fact ![]() is the true distribution of ![]() is bounded by ![]() .
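One standard way to realize such a test, assumed here for illustration rather than taken from the abstract: reject as soon as the ratio of the prequential likelihood to the null likelihood reaches 1/alpha. Under the null this ratio is a nonnegative martingale with mean 1, so by Ville's inequality the null is ever rejected with probability at most alpha. The KT scheme, the fair-coin null and the level alpha are hypothetical choices.

```python
import math
from collections import Counter

def first_rejection_time(sequence, null_prob, alpha):
    """First t with q(x^t) / p0(x^t) >= 1 / alpha, or None if the null is never rejected.

    q is a KT prequential scheme; p0 is an i.i.d. null with marginal null_prob (a dict).
    """
    threshold = math.log(1.0 / alpha)
    counts, log_ratio = Counter(), 0.0
    k = len(null_prob)
    for t, x in enumerate(sequence, start=1):
        q = (counts[x] + 0.5) / (t - 1 + 0.5 * k)  # KT conditional; t - 1 symbols seen so far
        log_ratio += math.log(q) - math.log(null_prob[x])
        counts[x] += 1
        if log_ratio >= threshold:
            return t
    return None

fair = {0: 0.5, 1: 0.5}
biased = [1 if i % 10 == 0 else 0 for i in range(1000)]  # far from a fair coin
alternating = [i % 2 for i in range(1000)]               # balanced symbol counts
print(first_rejection_time(biased, fair, alpha=0.01))
print(first_rejection_time(alternating, fair, alpha=0.01))  # None
```

The alternating sequence is never rejected because a KT scheme cannot beat the fair coin on sequences with balanced counts; the biased one is rejected after a few dozen symbols.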

2. Universal gambling schemes. Suppose a gambler bets on each outcome ![]() with knowledge of the past ![]() according to the conditional probability density ![]() relative to the odds measure ![]() . The gambler's capital will be returned multiplied by the factor ![]() when the outcome ![]() is revealed. The gambler does not know the true process distribution ![]() , but he learns from experience in such a way that his compounded capital ![]() grows exponentially almost surely with the maximal rate ![]() that could be asymptotically attained if he knew the infinite past ![]() and hence the process distribution ![]() to begin with.
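A minimal sketch of the capital process, assuming a binary outcome space, an odds measure given as a probability vector, and a gambler who bets the KT conditional probabilities (our illustrative choice; a KT bettor only competes with memoryless strategies and is not universal for general stationary processes). In each round the capital is multiplied by the betting density relative to the odds measure.

```python
import math
import random
from collections import Counter

def log_capital(outcomes, odds):
    """log S_n = sum_t log(b(x_t | x^{t-1}) / o(x_t)), with b the KT betting law."""
    counts, log_s = Counter(), 0.0
    k = len(odds)
    for t, x in enumerate(outcomes):
        bet = (counts[x] + 0.5) / (t + 0.5 * k)  # fraction of capital placed on outcome x
        log_s += math.log(bet / odds[x])         # payoff: bet density w.r.t. the odds measure
        counts[x] += 1
    return log_s

rng = random.Random(1)
outcomes = [1 if rng.random() < 0.1 else 0 for _ in range(10_000)]  # hypothetical i.i.d. data
growth = log_capital(outcomes, odds={0: 0.5, 1: 0.5}) / len(outcomes)
print(growth)
```

With fair 2-for-1 odds and i.i.d. outcomes, the growth rate approaches log 2 minus the entropy of the outcome distribution, which is what a gambler who knew P(1) from the start would achieve.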

3. Universal data compression. A parsimonious prequential modeling scheme for ![]() can be used to design a universal data compression algorithm for quantized versions of ![]() . We can design uniquely decipherable variable-length binary codes for quantized blocks of increasing length in such a way that the per-symbol description length approaches the entropy rate of the quantized process.
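The step from a prequential model to a uniquely decipherable code can be sketched with Shannon code lengths ceil(-log2 q(x^n)) for whole blocks: they satisfy the Kraft inequality, so a prefix code with these lengths exists (arithmetic coding realizes such lengths sequentially to within about two bits). The KT model, the binary alphabet and the block sizes are assumptions for illustration.

```python
import itertools
import math
import random
from collections import Counter

def kt_log2_prob(block, alphabet_size=2):
    """log2 q(x^n) for the KT prequential scheme."""
    counts, log2_q = Counter(), 0.0
    for t, x in enumerate(block):
        log2_q += math.log2((counts[x] + 0.5) / (t + 0.5 * alphabet_size))
        counts[x] += 1
    return log2_q

def shannon_code_length(block):
    """Codeword length in bits assigned to the block: ceil(-log2 q(x^n))."""
    return math.ceil(-kt_log2_prob(block))

# Kraft sum over all binary blocks of length 8 (at most 1, so a prefix code exists).
kraft = sum(2.0 ** -shannon_code_length(b) for b in itertools.product((0, 1), repeat=8))

rng = random.Random(2)
block = [1 if rng.random() < 0.1 else 0 for _ in range(10_000)]  # hypothetical quantized data
bits_per_symbol = shannon_code_length(block) / len(block)
print(kraft, bits_per_symbol)
```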

4. Consistent estimation of ![]() . Given a parsimonious prequential modeling scheme ![]() , define

I. Althöfer: On the Design of Multiple Choice Systems: Shortlisting in Candidate Sets

Definition (``Multiple Choice System for Decision Support''): Programs compute a clear handful of candidate solutions. Then a human has the final choice amongst these candidates.

For short, we call such systems ``Multiple Choice Systems''. In electronic commerce, Multiple Choice Systems are (or were) sometimes advertised under the names ``Recommender System'' and ``Recommendation System''.

We describe three different Multiple Choice Systems which were very successful in the game of chess.

``3-Hirn'' / ``Triple Brain'': Two independent programs make one proposal each.

``Double-Fritz with Boss'': Since 1995 the chess program ``Fritz'' has had a 2-best mode in which it computes not only its best but also its second-best candidate move. The human chooses between these top-2 candidates.

``Listen-3-Hirn'' / ``List Triple Brain'': Two independent chess programs are used which both have k-best modes. Program 1 proposes its k best candidate moves, whereas program 2 gives its top m candidates. (The numbers k and m are chosen appropriately, for instance k=m=3.)

In all three examples the human has the final choice amongst the computer proposals. However, he is not allowed to outvote the programs.

The speaker is an amateur chess player (rating 1900 ± epsilon) and experimented with these Multiple Choice Systems in performance-oriented chess: with 3-Hirn from 1985 to 1996, with Double-Fritz with Boss in 1996, and with List Triple Brain in 1997. The systems almost always performed 200 rating points above the single programs they contained. The ``final'' success was a 5-3 match win of List Triple Brain over Germany's no. 1 player Arthur Yusupov (Elo 2645 at the time) in 1997. After this event, top human players were no longer willing to play against these Multiple Choice Systems.

Claim: In a Multiple Choice System the human is not allowed to outvote the program. This often helps to improve the performance, especially in realtime applications.

Natural building blocks of a Multiple Choice System are programs that compute more than one candidate solution. In this context k-best algorithms are often unsuitable, because they tend to give only micro-mutations instead of true alternatives (prime example: the shortest path problem). The task of generating true alternatives of ``good'' quality is typically nontrivial.
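To illustrate the difference between micro-mutations and true alternatives in the shortest path example, here is a sketch of the penalty heuristic, a common technique for alternative routes that we use for illustration (it is not claimed to be the speaker's method): compute a shortest path, multiply the weights of its edges by a penalty factor, and solve again. The graph, the factor 3 and all names are hypothetical.

```python
import heapq

def dijkstra(graph, source, target):
    """Shortest path in {node: {neighbor: weight}}; assumes target is reachable."""
    dist, prev, done = {source: 0.0}, {}, set()
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if u in done:
            continue
        done.add(u)
        if u == target:
            break
        for v, w in graph[u].items():
            if d + w < dist.get(v, float("inf")):
                dist[v], prev[v] = d + w, u
                heapq.heappush(heap, (d + w, v))
    path = [target]
    while path[-1] != source:
        path.append(prev[path[-1]])
    return dist[target], path[::-1]

def penalized_alternative(graph, source, target, factor=3.0):
    """Best path, then the best path after multiplying its edge weights by `factor`."""
    _, best = dijkstra(graph, source, target)
    used = set(zip(best, best[1:])) | set(zip(best[1:], best))
    penalized = {u: {v: w * factor if (u, v) in used else w for v, w in nbrs.items()}
                 for u, nbrs in graph.items()}
    _, alternative = dijkstra(penalized, source, target)
    return best, alternative

def undirected(edges):
    graph = {}
    for u, v, w in edges:
        graph.setdefault(u, {})[v] = w
        graph.setdefault(v, {})[u] = w
    return graph

edges = [("S", "A", 1), ("A", "B", 1), ("B", "C", 1), ("C", "T", 1),  # best route, cost 4
         ("B", "B2", 0.6), ("B2", "C", 0.6),                            # micro-detour, cost 4.2
         ("S", "D", 1.5), ("D", "E", 1.5), ("E", "T", 1.5)]              # true alternative, cost 4.5
best, alternative = penalized_alternative(undirected(edges), "S", "T")
print(best, alternative)  # ['S', 'A', 'B', 'C', 'T'] ['S', 'D', 'E', 'T']
```

A 2-best algorithm on this graph would return the 4.2 detour, which shares three edges with the best route; the penalty method returns the edge-disjoint route of cost 4.5 instead.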

The talk will center around the problem of finding true alternatives in large candidate sets.

R. Apfelbach: Chemical communication in mammals: What we know and what we would like to know

Chemical regulation of cellular processes makes its appearance in some of the most primitive plant and animal species. Chemical regulatory agents include relatively nonspecific molecules such as CO2, H+, O2 and Ca2+, and others, like cAMP, that are more complex and are produced specifically as regulators, or messengers. Chemical messengers operate at all levels of biological organization, from subcellular to interorganismal. Some animal species, for example, use chemical messengers as a means of communication between individuals of the same species. In this case the chemical messengers are called pheromones.

In the talk we will first look at the sender of chemical signals. Topics to be covered include the chemistry of pheromones, where pheromones are produced and how they are released, and what kind of information pheromones convey. The second part of the talk will concentrate on the receiving station: where and how are chemical signals received? We will follow the chemical signals from the receptor to the brain. Topics to be covered include the receiving structure (olfactory epithelium), the processing station (olfactory bulb) and olfactory coding.

The talk will concentrate on selected mammalian species. However, some differences and similarities between invertebrates and vertebrates will be indicated.

A. Apostolico: Pattern Discovery and the Algorithmics of Surprise

The problem of characterizing and detecting recurrent sequence patterns such as substrings or motifs and related associations or rules is variously pursued in order to compress data, unveil structure, infer succinct descriptions, extract and classify features, etc. In Molecular Biology such regularities have been implicated in various facets of biological function and structure. The discovery, particularly on a massive scale, of significant patterns and correlations thereof poses interesting methodological and algorithmic problems, and often exposes scenarios in which tables and descriptors grow faster and bigger than the phenomena they are meant to encapsulate. This talk reviews some results at the crossroads of statistics, pattern matching and combinatorics on words that enable us to control such paradoxes, and presents related constructions, implementations and empirical results.
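A minimal sketch of one "surprise" score: compare the observed count of each length-k substring with its expected count under an i.i.d. model fitted to the letter frequencies. Normalizing by the square root of the expected count is a crude Poisson-style simplification (the exact variance of substring counts is more involved), and the planted-motif data are hypothetical.

```python
import math
import random
from collections import Counter

def surprise_scores(text, k):
    """Map each length-k substring to (observed - expected) / sqrt(expected).

    Expected counts come from an i.i.d. model with the empirical letter
    frequencies of `text`.
    """
    n = len(text)
    freq = {a: c / n for a, c in Counter(text).items()}
    observed = Counter(text[i:i + k] for i in range(n - k + 1))
    scores = {}
    for word, count in observed.items():
        expected = (n - k + 1) * math.prod(freq[a] for a in word)
        scores[word] = (count - expected) / math.sqrt(expected)
    return scores

# Hypothetical data: random DNA-like chunks separated by a planted motif (19 copies).
rng = random.Random(0)
chunks = ["".join(rng.choice("ACGT") for _ in range(100)) for _ in range(20)]
text = "GATTACA".join(chunks)
scores = surprise_scores(text, 7)
top = max(scores, key=scores.get)
print(top, round(scores[top], 1))
```

Enumerating every substring this way is exactly where tables can outgrow the data; suffix-tree methods avoid materializing all substrings, which this sketch does not attempt.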

A. Ashikhmin: Nonbinary Quantum Stabilizer Codes

We define and show how to construct nonbinary quantum stabilizer codes. Our approach is based on nonbinary error bases. It generalizes the relationship between self-orthogonal codes over $\mathbb{F}_4$ and binary quantum codes to one between self-orthogonal codes over $\mathbb{F}_{q^2}$ and $q$-ary quantum codes for any prime power $q$.
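For a prime q = p, the commutation condition behind stabilizer codes can be sketched concretely: the generalized Pauli operators X(a)Z(b), with a, b in F_p^n, commute exactly when the symplectic form a.b' - b.a' vanishes mod p, so a stabilizer group corresponds to a self-orthogonal subspace of F_p^(2n) under that form. Prime powers need a trace form over the extension field, which this sketch omits; the example generators are hypothetical.

```python
def symplectic_product(u, v, p):
    """<(a|b), (a'|b')> = a.b' - b.a' (mod p) for vectors of length 2n over F_p."""
    n = len(u) // 2
    a, b = u[:n], u[n:]
    a2, b2 = v[:n], v[n:]
    return (sum(x * y for x, y in zip(a, b2)) - sum(x * y for x, y in zip(b, a2))) % p

def is_self_orthogonal(generators, p):
    """True if every pair of generators has zero symplectic product (operators commute)."""
    return all(symplectic_product(u, v, p) == 0
               for i, u in enumerate(generators) for v in generators[i:])

# Two qutrits (p = 3): X (x) X is encoded as (1, 1 | 0, 0), Z (x) Z^2 as (0, 0 | 1, 2).
print(is_self_orthogonal([[1, 1, 0, 0], [0, 0, 1, 2]], 3))  # True: they commute
print(is_self_orthogonal([[1, 1, 0, 0], [0, 0, 1, 1]], 3))  # False: X(x)X and Z(x)Z do not
```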

R. Ahlswede, H. Aydinian, and L.H. Khachatrian: On Bohman's conjecture related to a sum packing problem of Erdős

Motivated by a sum packing problem of Erdős, Bohman discussed a problem which seems to be of independent interest. Let ![]() be a hyperplane in ![]() such that ![]() .

The problem is to determine

V. B. Balakirsky: Hashing of Databases with the Use of Metric Properties of the Hamming Space

We describe hashing of databases as a problem of information and coding theory. It is shown that the triangle inequality for the Hamming distances between binary vectors can substantially reduce the computational effort needed to search for a pattern in databases. Introducing the Lee distance of the Hamming distances leads to a new metric space in which the triangle inequality is used effectively.
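A sketch of how the triangle inequality saves work: store each word's Hamming distance to a fixed pivot; for a query, any word whose stored distance differs from the query's distance to the pivot by more than the search radius can be discarded without comparing it with the query. The single all-zero pivot, the word length and the random database are assumptions for illustration.

```python
import random

def hamming(x, y):
    return sum(a != b for a, b in zip(x, y))

class PivotIndex:
    """Binary words of equal length, each stored with its distance to one pivot."""

    def __init__(self, words, pivot):
        self.pivot = pivot
        self.entries = [(hamming(w, pivot), w) for w in words]

    def search(self, query, radius):
        """Return (words within radius of query, number of full distance computations)."""
        dq = hamming(query, self.pivot)
        matches, computed = [], 0
        for dw, w in self.entries:
            # Triangle inequality: d(query, w) >= |d(query, pivot) - d(w, pivot)|.
            if abs(dq - dw) > radius:
                continue
            computed += 1
            if hamming(query, w) <= radius:
                matches.append(w)
        return matches, computed

rng = random.Random(3)
words = [tuple(rng.randrange(2) for _ in range(64)) for _ in range(1000)]
index = PivotIndex(words, pivot=(0,) * 64)
query = list(words[0])
for i in (0, 5):  # flip two bits: a noisy copy of words[0]
    query[i] ^= 1
query = tuple(query)
matches, computed = index.search(query, radius=2)
print(len(matches), computed)
```

With one pivot roughly half of the database is skipped here; using several pivots and keeping only words that pass every bound prunes more.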

V.B. Balakirsky and A.J.H. Vinck: On the performance of permutation codes for multi-user communication

Permutation coding for multi-user communication schemes that originate from Fast Frequency Hopping / Multiple Frequency Shift Keying modulation is investigated. Each sender is either passive or sends a signal formed as a concatenation of ![]() elementary signals having ![]() different specified frequencies. There is also a jammer who can introduce disturbances. A single disturbance is either the sending of a signal containing all ![]() frequencies at a certain time instant or the sending of some elementary signal at all time instants. Each receiver receives a vector of ![]() sets, where the set at each time instant contains a fixed frequency if and only if the corresponding elementary signal was sent either by some sender or by the jammer. The task of the receiver is unique decoding of the message of his sender.

We present regular constructions for permutation codes for this scheme given the following parameters: the number of frequencies, the number of pairs (sender, receiver), the number of messages per sender, the maximum number of disturbances of the jammer.
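A toy model of the receiver side, assuming M = 4 frequencies and time instants, codewords that are permutations of the frequencies, and a receiver that outputs every message of its own sender whose codeword is covered by the received sets at all time instants (unique decoding means exactly one such message). The codebooks are hypothetical and are not the regular constructions of the abstract.

```python
def received_sets(transmitted, jam_times, jam_freqs, M):
    """Sets of frequencies observed at each of the M time instants.

    transmitted: codewords (length-M tuples of frequencies) of the active senders
    jam_times:   instants at which the jammer sends all M frequencies
    jam_freqs:   frequencies the jammer sends at every instant
    """
    sets = [set(jam_freqs) for _ in range(M)]
    for t in jam_times:
        sets[t] = set(range(M))
    for word in transmitted:
        for t, f in enumerate(word):
            sets[t].add(f)
    return sets

def decode(codebook, sets):
    """Messages of one sender whose codeword is covered by the received sets."""
    return [m for m, word in codebook.items()
            if all(f in s for f, s in zip(word, sets))]

M = 4
codebook_1 = {0: (0, 1, 2, 3), 1: (1, 0, 3, 2)}  # hypothetical codebooks: these four
codebook_2 = {0: (2, 3, 0, 1), 1: (3, 2, 1, 0)}  # permutations differ in every position
sets = received_sets([codebook_1[0], codebook_2[1]], jam_times=[0], jam_freqs=[], M=M)
print(decode(codebook_1, sets), decode(codebook_2, sets))  # [0] [1]
```

One jammed time instant cannot confuse the receivers here, because any two codewords disagree in all four positions and the other three instants still separate them.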

A. Barg: Error exponents of expander codes under linear-time decoding

We analyze iterative decoding of codes constructed from bipartite graphs with good expansion properties. For code rates close to the capacity of the binary symmetric channel, we prove a new estimate that improves previously known results for expander codes and concatenated codes.

V. Blinovsky: New Approach to the Estimation of the probability of Decoding Error

We offer a new approach to deriving lower bounds for the probability of decoding error in the discrete memoryless channel at zero rate. This approach substantially simplifies the process of obtaining the bound, making it natural, and at the same time improves the remainder term of the estimate. Consider the discrete memoryless channel determined by the set of conditional probabilities ![]() , on the finite set ![]() . The set ![]() generates the set of conditional probabilities

The maximal probability of decoding error ![]() is defined by the relation

In [1], the central result, obtained by Shannon, Gallager and Berlekamp, was the proof of the following bound:

In the present work we offer an approach which seems to us more natural and which allows us to obtain the bound

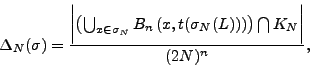

V. Blinovsky: Random sphere packing

We study the probabilistic properties of random packings in Euclidean space.

For the locally finite set ![]() and the cube ![]() with center at ![]() and edge ![]() , define ![]() and let

Next we consider a natural generalization of the previous situation. We say that the locally finite subset ![]() is a ![]() packing if for arbitrary ![]() points ![]() the following relation holds

We calculate the variation of the random variable ![]() . We can say almost nothing about the asymptotic behavior of the normalized ![]() as ![]() , in contrast to what was done in the case of ![]() .

H. Brighton: Modelling the evolution of linguistic structure

Would a thorough understanding of the Language Acquisition Device (LAD) tell us why language has the structure it does? This presentation will introduce work that suggests cultural dynamics, in conjunction with biological evolution, make the relationship between linguistic structure and the LAD non-trivial.

R. Ahlswede and N. Cai: Watermarking identification codes with related topics on common randomness (see [B4])

J.P. Allouche, M. Baake, J. Cassaigne, and D. Damanik: Palindrome complexity

One way to measure the degree of ``complication'' of an infinite word ![]() is to associate to it a function ![]() called subword complexity. This function counts the number of finite subwords (blocks of consecutive letters) contained in ![]() according to their length ![]() .

We are interested here in a particular class of subwords, the palindromes, words that remain unchanged when read from right to left. By counting the number of palindromes that occur in ![]() , one defines a new function ![]() , the palindrome complexity. This function has some applications in theoretical physics.

We shall first present several simple examples that illustrate the different possible behaviours of the function ![]() , and show that this function conveys information about the infinite word that cannot be deduced from ![]() alone. Then we shall establish an inequality between the two functions, and show through other examples that this inequality cannot be substantially improved.
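As a concrete example of the two functions, the Fibonacci word (the fixed point of 0 -> 01, 1 -> 0) is Sturmian, so it has n + 1 distinct subwords of each length n, and by a theorem of Droubay and Pirillo it has one palindromic subword of each even length and two of each odd length. The sketch counts both quantities on a finite prefix; the names are our own, and counting on a prefix is only reliable for lengths that are small compared with the prefix.

```python
def fibonacci_word(min_length):
    """Prefix of the Fibonacci word, the fixed point of the morphism 0 -> 01, 1 -> 0."""
    w = "0"
    while len(w) < min_length:
        w = "".join("01" if c == "0" else "0" for c in w)
    return w

def factors(word, n):
    """Distinct subwords (blocks of consecutive letters) of length n."""
    return {word[i:i + n] for i in range(len(word) - n + 1)}

def complexities(word, n):
    """(subword count, palindrome count) for length n, estimated from a finite prefix."""
    fs = factors(word, n)
    return len(fs), sum(1 for f in fs if f == f[::-1])

w = fibonacci_word(2000)
for n in range(1, 7):
    print(n, complexities(w, n))  # (n + 1, 2 if n is odd else 1)
```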

F. Cicalese, L. Gargano, and U. Vaccaro: Optimal approximation of uniform distributions with a biased coin

Given a coin of bias ![]() , we consider the problem of generating probability distributions that are as close as possible to the uniform one, using flips of the given coin. We provide a simple greedy algorithm that, given as input bits produced by a coin of bias ![]() , produces as output a probability distribution at minimum distance from the uniform one, under several different notions of distance between probability distributions.
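A sketch of one natural greedy rule, assumed here for illustration because the abstract does not spell out its algorithm: enumerate the 2^n outcomes of n flips and, in decreasing order of probability, assign each outcome to the output symbol that currently has the least mass. The bias is taken to be the probability of a 1, and all parameters are hypothetical.

```python
import heapq
import itertools

def greedy_near_uniform(p, n_flips, m):
    """Map the 2**n_flips flip sequences to m outputs; return the sorted output masses.

    p is the probability that a single flip is 1. Outcomes are processed in
    decreasing order of probability, each going to the currently lightest output.
    """
    probs = sorted(
        (p ** sum(s) * (1 - p) ** (n_flips - sum(s))
         for s in itertools.product((0, 1), repeat=n_flips)),
        reverse=True,
    )
    bins = [(0.0, j) for j in range(m)]  # (current mass, output label)
    heapq.heapify(bins)
    for q in probs:
        mass, j = heapq.heappop(bins)
        heapq.heappush(bins, (mass + q, j))
    return sorted(mass for mass, _ in bins)

print(greedy_near_uniform(0.5, 2, 4))  # [0.25, 0.25, 0.25, 0.25]
print(greedy_near_uniform(0.3, 6, 3))
```

Since each outcome goes to the lightest output, the gap between the heaviest and lightest output never exceeds the largest single outcome probability.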

S. Csibi and E.C. van der Meulen: Properties of codes for identification via a multiple access channel under a word-length constraint (see also [B8])

The purpose of the present exposition is twofold: (i) to consider a published and an as yet unpublished approach by the authors to drastically suppressing the false identification probability of huge finite-length identification plus transmission codes, and (ii) to revisit results, also published by the present authors, on the possibilities of conveying codewords through a kind of multiple access erasure channel that exhibits particular flexibility. The considered suppression of the false identification probability might be viewed, in a sense, as an elementary form of forward error control designed especially for the considered huge identification codes under a word-length constraint. The considered kind of multiple access is controlled, both at the single input and at all outputs, by the very same protocol sequence of nearly least length. In the present exposition we rely essentially upon pioneering findings by Ahlswede and Dueck (1989a, 1989b) and Han and Verdú (1992 and 1993), and on the concatenation version of the two explicit code constructions proposed by Verdú and Wei (1993). For generating a cyclically permutable control sequence, and for giving an upper bound on the least length of such sequences, we rely upon Nguyen, Györfi, Massey (1992). A lower bound on this least length is obtained by extending a fundamental approach by Bassalygo and Pinsker (1983). The considered way of identification via an MA channel might be of interest for certain prospective remote alarm services. Only standard identification tasks are considered in the present exposition. However, there is another interesting class of networking examples which might perhaps be formulated as ![]() -identification tasks. See Ahlswede (1997).

L. Demetrius: Entropy in Thermodynamics and Evolution

The science of thermodynamics is concerned with understanding the properties of inanimate matter in so far as they are determined by changes in temperature. The Second Law asserts that in irreversible processes there is a uni-directional increase in thermodynamic entropy, a measure of the degree of uncertainty in the thermal energy state of a randomly chosen particle in the aggregate. The science of evolution is concerned with understanding the properties of populations of living matter in so far as they are regulated by changes in generation time. Directionality theory, a mathematical model of the evolutionary process, establishes that in populations subject to bounded growth constraints, there is a uni-directional increase in evolutionary entropy, a measure of the degree of uncertainty in the age of the immediate ancestor of a randomly chosen newborn. I will review the mathematical basis of directionality theory and analyse the relation between directionality theory and statistical thermodynamics. By exploiting an analytic relation between temperature and generation time, I will show that the directionality principle for evolutionary entropy is a non-equilibrium extension of the principle of a uni-directional increase of thermodynamic entropy. The analytic relation between these directionality principles is consistent with the hypothesis of the equivalence of fundamental laws as one moves up the hierarchy, from aggregates of inanimate matter, where the thermodynamic laws apply, to populations of replicating entities (molecules, cells, higher organisms), where evolutionary principles prevail.

R. Ahlswede, L. Bäumer, and C. Deppe: Information theoretic models in language evolution (included in [B4])

Human language is used to store and transmit information. Therefore there is significant interest in mathematical models of language development. These models should explain how natural selection can lead to the gradual emergence of human language. Among others, Nowak et al. created a mathematical model in which the fitness of a language is introduced. For this model they showed that if signals can be mistaken for each other, then the performance of such systems is limited: it cannot be increased beyond a fixed threshold by adding more and more signals. Nevertheless, the concatenation of signals or phonemes into words significantly increases the fitness of the language. The fitness of such a signalling system depends on the number of signals and on the probabilities of transmitting individual signals correctly. In the papers of Nowak et al., optimal configurations of signals in different metric spaces were investigated. Nowak conjectures that the fitness of a product space is equal to the product of the fitnesses of the metric spaces. We analyse the Hamming model. In this model the direct consequence of Nowak's conjecture is that the fitness of the whole space equals the maximal fitness. We show that the fitness of Hamming codes asymptotically achieves this maximum.

These theoretical models of the fitness of a language enable the investigation of classical information-theoretic problems in this context. In particular, this is true for feedback problems, transmission problems for multiway channels, etc. In the feedback model we developed, we show that feedback increases the fitness of a language.

A.G. D'yachkov and P.A. Vilenkin: Statistical Estimation of Average Distances for the Insertion-Deletion Metric

Using the Monte Carlo method and computer simulation, we construct and calculate point estimators and confidence intervals for average distance fractions in the space of ![]() -ary ![]() -sequences with the insertion-deletion metric (ID metric) [1]. These unknown fractions are cut-off points for hypothetical lower bounds on the rate of codes for the ID metric.
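A Monte Carlo sketch of one such estimate, assuming the quantity of interest is the expected length of a longest common subsequence (LCS) of two independent uniform q-ary sequences of length n, divided by n; the insertion-deletion distance between equal-length words is a decreasing function of their LCS (the minimum number of insertions and deletions is 2(n - LCS)). The interval is a plain normal-approximation 95% interval, and q, n and the number of trials are hypothetical.

```python
import math
import random

def lcs_length(x, y):
    """Length of a longest common subsequence, O(len(x) * len(y)) dynamic programming."""
    prev = [0] * (len(y) + 1)
    for a in x:
        cur = [0]
        for j, b in enumerate(y):
            cur.append(prev[j] + 1 if a == b else max(prev[j + 1], cur[j]))
        prev = cur
    return prev[-1]

def estimate_lcs_fraction(q, n, trials, seed=0):
    """Point estimate and ~95% CI for E[LCS(X, Y)] / n, X and Y uniform q-ary of length n."""
    rng = random.Random(seed)
    samples = []
    for _ in range(trials):
        x = [rng.randrange(q) for _ in range(n)]
        y = [rng.randrange(q) for _ in range(n)]
        samples.append(lcs_length(x, y) / n)
    mean = sum(samples) / trials
    var = sum((s - mean) ** 2 for s in samples) / (trials - 1)
    half = 1.96 * math.sqrt(var / trials)
    return mean, (mean - half, mean + half)

mean, (lo, hi) = estimate_lcs_fraction(q=2, n=100, trials=40)
print(mean, lo, hi)
```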

S. Galatolo: Algorithmic information content, dynamical systems and weak chaos

By using the Algorithmic Information Content (also called Kolmogorov-Chaitin complexity) of a string, it is possible to define local invariants of dynamical systems. The local invariant measures the complexity of the orbits.

We will see some relations between orbit complexity and other measures of chaos (such as entropy, sensitivity to initial conditions and dimension). These relations between complexity, sensitivity and dimension give nontrivial information even in the weakly chaotic (zero-entropy) case.
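Algorithmic information content is not computable, but a common practical proxy, assumed here, is the compressed size of a symbolic orbit (compressed length bounds the complexity from above, up to a constant). The sketch codes orbits of the logistic map x -> r x (1 - x) by the side of 1/2 on which each point falls and compresses them with zlib. The map, the partition and the parameter values are illustrative choices, not taken from the talk.

```python
import zlib

def symbolic_orbit(r, x0, length):
    """Itinerary of x -> r*x*(1-x): '1' if the point is >= 1/2, else '0'."""
    x, symbols = x0, []
    for _ in range(length):
        symbols.append("1" if x >= 0.5 else "0")
        x = r * x * (1.0 - x)
    return "".join(symbols)

def compressed_bits_per_symbol(symbols):
    """zlib-compressed size in bits per symbol: a computable upper proxy for AIC per symbol."""
    return 8 * len(zlib.compress(symbols.encode("ascii"), 9)) / len(symbols)

chaotic = compressed_bits_per_symbol(symbolic_orbit(4.0, 0.1234, 20_000))
periodic = compressed_bits_per_symbol(symbolic_orbit(3.2, 0.1234, 20_000))
print(chaotic, periodic)
```

The chaotic orbit (r = 4) needs roughly one bit per symbol, the periodic one (r = 3.2) almost nothing; weakly chaotic orbits fall in between, with complexity growing sublinearly in the orbit length.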

E. Haroutunian and A. Ghazaryan: On the Shannon cipher system with a wiretapper guessing subject to distortion and reliability

We investigate the problem of wiretapper's guessing with respect to fidelity and reliability criteria in the Shannon cipher system.

Encrypted messages of a discrete memoryless source must be communicated over a public channel to a legitimate receiver. The key vector, independent of the source messages, is transmitted to the encrypter and the decrypter over a special secure channel protected against wiretappers. The transmitter encodes the source message and the key vector and sends a cryptogram over the public channel to the legitimate receiver, who, based on the cryptogram and the key vector, recovers the original message by means of the decryption function. The wiretapper, who eavesdrops on the public channel, aims to decrypt the original source message within a given distortion and reliability on the basis of the cryptogram, the source statistics and the encryption function. The security of the system is measured by the expected number of the wiretapper's guesses. The problem is to determine the minimum (over all guessing lists) of the maximal (over all encryption functions) expected number of sequential guesses by the wiretapper until a satisfactory message is found.

The guessing problem was first considered by Massey (1994). This problem subject to fidelity criterion was studied by Arikan and Merhav (1998).

The investigated problem was proposed by Merhav and Arikan (1999) and is an extension of the problem considered by them in 1999. The main extension is the consideration of the distortion and reliability criteria and of the possibility of limiting the number of the wiretapper's guesses; that is, we demand that, for a given guessing list, distortion level ![]() and reliability ![]() , the probability that the distortions between source messages of blocklength ![]() and each of the first ![]() guessing vectors are larger than ![]() must not exceed ![]() .

For a given key rate ![]() , the minimum (over all guessing lists) of the maximal (over all encryption functions) ![]() and the expected number of required guesses, with respect to the distortion and reliability criteria, are found.

For the special case of a binary source and the Hamming distortion measure, the expected number of the wiretapper's guesses is calculated.
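A brute-force sketch of the guessing cost in this binary Hamming setting, ignoring the encryption layer: for a memoryless binary source and blocklength n, it computes the expected index of the first guess within a given Hamming distortion of the message. With zero distortion and guesses in decreasing order of probability this is Massey's optimal guessing order; with positive distortion the probability-ordered list is only a heuristic and need not be optimal. Parameters and names are hypothetical.

```python
import itertools

def expected_guesses(p, n, guess_list=None, max_distortion=0):
    """E[number of guesses] for a binary memoryless source with P(1) = p, blocklength n.

    A guess g succeeds on message x when the Hamming distance d(x, g) <= max_distortion.
    Default list: all blocks in decreasing order of probability. A custom list must
    eventually succeed on every message.
    """
    blocks = list(itertools.product((0, 1), repeat=n))
    prob = {x: p ** sum(x) * (1 - p) ** (n - sum(x)) for x in blocks}
    if guess_list is None:
        guess_list = sorted(blocks, key=prob.get, reverse=True)
    total = 0.0
    for x in blocks:
        for i, g in enumerate(guess_list, start=1):
            if sum(a != b for a, b in zip(x, g)) <= max_distortion:
                total += i * prob[x]
                break
    return total

print(expected_guesses(0.5, 3))                    # 4.5 = (2**3 + 1) / 2
print(expected_guesses(0.5, 3, max_distortion=1))  # 1.875
print(expected_guesses(0.1, 4))
```

Allowing distortion sharply reduces the expected number of guesses, since each guess now covers a Hamming ball of messages.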

J. Gruska: Quantum Multipartite Entanglement

Understanding multipartite quantum entanglement is currently one of the major problems in quantum information science. Progress in this area is needed both for developing the theory of quantum information science and for understanding the computation and communication power of quantum information processing.

In the talk we first demonstrate the complexity of the problem and its importance for various areas of quantum information science. Then we discuss a variety of basic general approaches to dealing with the problem, approaches that are of broader importance for the field. Finally, we concentrate on some major results in this area and various open problems.

K. Gyarmati: On a pseudorandom property of binary sequences

C. Mauduit and A. Sárközy proposed the use of well-distribution measure and correlation measure as measures of pseudorandomness of finite binary sequences. In this paper we will introduce and study a further measure of pseudorandomness: the symmetry measure. First we will give upper and lower bounds for the symmetry measure. We will also show that there exists a sequence for which each of the well-distribution, correlation and symmetry measures are small. Finally we will compare these measures of pseudorandomness.
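Brute-force sketches of the two Mauduit-Sárközy measures mentioned above, following their standard definitions as we understand them, for a sequence E_N = (e_1, ..., e_N) of +1/-1 values (indices are 0-based in the code): the well-distribution measure W(E_N), the largest absolute sum along an arithmetic progression inside the sequence, and the correlation measure of order 2, C_2(E_N), the largest absolute partial correlation sum between two shifts. The symmetry measure is omitted because its definition is not given here.

```python
def well_distribution(e):
    """W(E_N): max |sum_{j<t} e[a + j*b]| over progressions a, a+b, ..., inside the sequence."""
    N, best = len(e), 0
    for a in range(N):
        for b in range(1, N):
            s = 0
            for idx in range(a, N, b):  # running sum covers every length t
                s += e[idx]
                best = max(best, abs(s))
    return best

def correlation2(e):
    """C_2(E_N): max |sum_{n<M} e[n+d1] * e[n+d2]| over 0 <= d1 < d2, M + d2 <= N."""
    N, best = len(e), 0
    for d1 in range(N):
        for d2 in range(d1 + 1, N):
            s = 0
            for n in range(N - d2):  # running sum covers every M
                s += e[n + d1] * e[n + d2]
                best = max(best, abs(s))
    return best

alternating = [1, -1] * 4
print(well_distribution(alternating), correlation2(alternating))  # 4 7
```

The alternating sequence shows why both measures are needed: its plain partial sums stay bounded by 1, yet the progression of even positions and the shift-by-one correlation expose its structure.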

M. Haroutunian: Bounds for rate-reliability function of multi-user channels

Very important properties of each communication channel are characterized by the reliability function ![]() , which was introduced by Shannon 1959 as the optimal coefficient of the exponential decrease

A large number of works is devoted to the study of this function for various communication systems. Along with the achievements in this part of Shannon theory, many problems remain unsolved. Because of the fundamental difficulty of finding the reliability function for the whole range of rates ![]() , this problem has been completely solved only in rather particular cases. The typical situation (for example, for the binary symmetric channel) is that the obtained upper and lower bounds for the function ![]() coincide only for high rates, above the critical rate.

E. Haroutunian proposed and developed an approach which consists in studying the function ![]() , inverse to ![]() (Haroutunian 1967, 1969, 1982). This is not merely a mechanical exchange of the roles of the independent and dependent variables, since investigating the optimal rates of codes that ensure, as ![]() increases, an exponential decrease of the error probability with a given exponent (reliability) ![]() may sometimes be more expedient than studying the function ![]() .

The definition of the function ![]() is in natural conformity with Shannon's notion of the channel capacity ![]() . So, by analogy with the definition of the capacity, this characteristic of the channel may be called ![]() -capacity.

On the other hand, the name rate-reliability function is also logical.

Concerning methods of constructing bounds, it turns out that Shannon's random coding method (Shannon 1948) for proving the existence of codes with definite properties can be applied with the same success to the study of the rate-reliability function. For deriving upper bounds of converse coding theorem type (so-called sphere packing bounds), E. Haroutunian 1967, 1982 proposed a simple combinatorial method, which was later applied to various complicated systems.
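For the binary symmetric channel with crossover probability p, the sphere packing bound reads E(R) <= E_sp(R) = D(delta || p), where p <= delta <= 1/2 solves h(delta) = 1 - R (logarithms to base 2); it is tight above the critical rate. Since E_sp decreases in R, inverting it gives an upper bound R_sp(E) on the rate-reliability function R(E). The sketch computes both directions by bisection; it illustrates the textbook bound, not the combinatorial method mentioned above, and the names are ours.

```python
import math

def h2(x):
    """Binary entropy in bits."""
    return 0.0 if x in (0.0, 1.0) else -x * math.log2(x) - (1 - x) * math.log2(1 - x)

def kl2(a, b):
    """Binary divergence D(a || b) in bits."""
    return a * math.log2(a / b) + (1 - a) * math.log2((1 - a) / (1 - b))

def solve_increasing(f, target, lo, hi, iters=200):
    """Bisection for f(x) = target, with f increasing on [lo, hi]."""
    for _ in range(iters):
        mid = (lo + hi) / 2
        if f(mid) < target:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2

def sphere_packing_exponent(rate, p):
    """E_sp(R) = D(delta || p) with h(delta) = 1 - R, p <= delta <= 1/2, 0 <= R <= 1 - h(p)."""
    delta = solve_increasing(h2, 1 - rate, p, 0.5)
    return kl2(delta, p)

def sphere_packing_rate(exponent, p):
    """Inverse R_sp(E): the rate at which E_sp equals the given reliability E."""
    delta = solve_increasing(lambda d: kl2(d, p), exponent, p, 0.5)
    return 1 - h2(delta)

p = 0.1
E = sphere_packing_exponent(0.3, p)
print(E, sphere_packing_rate(E, p))  # the second value recovers R = 0.3
```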

The two-way channel (TWC) was first investigated by Shannon 1961. The channel has two terminals, and the transmission in one direction interferes with the transmission in the opposite direction. The sources of the two terminals are independent. The encoding at each terminal depends on both the message to be transmitted and the sequence of symbols received at that terminal. Similarly, the decoding at each terminal depends on the sequences of symbols received and sent at that terminal.

We consider the restricted version of the TWC (RTWC), in which the sequence transmitted from each terminal depends only on the message and not on the sequence received at that terminal.

The RTWC was also first investigated by Shannon 1961, who obtained the capacity region ![]() of the RTWC. The capacity region of the general TWC has not been found to date. Important results on various models of two-way channels were obtained by Ahlswede 1971, 1973, 1974, Dueck 1978, 1979, Han 1984, Sato 1977, and others. In particular, Dueck demonstrated that the capacity regions for average and maximal error probabilities do not coincide.
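For reference, Shannon's capacity region of the restricted two-way channel can be written in standard notation. The symbols below are our own, since the formula images are missing from this text: terminal i sends X_i and observes Y_i, the channel is P(y_1, y_2 | x_1, x_2), and, because the channel is restricted, the inputs are independent.

```latex
\mathcal{C}_{\mathrm{RTWC}}
  = \overline{\mathrm{conv}} \bigcup_{P_{X_1} P_{X_2}}
    \bigl\{ (R_1, R_2) :\;
      R_1 \le I(X_1; Y_2 \mid X_2),\;
      R_2 \le I(X_2; Y_1 \mid X_1) \bigr\}
```

Each terminal decodes the other's message from its own output while knowing its own input, which is why each rate is bounded by a mutual information conditioned on the receiving terminal's input.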

Outer and inner bounds for ![]() are constructed. The limit of ![]() when ![]() , ![]() coincides with the capacity region of the RTWC found by Shannon.

Shannon 1961 also considered another version of the TWC, in which the transmission of information from one sender to its corresponding receiver may interfere with the transmission of information from the other sender to its receiver; this model was later called the interference channel (IFC).

The general interference channel (GIFC) differs from the TWC in two respects: the sender at each terminal does not observe the outputs at that terminal, and there is no side information at the receivers.

Ahlswede 1973, 1974 obtained bounds for the capacity region of the GIFC. The papers of Carleial 1975, 1978, 1983 are also devoted to the investigation of the IFC, and definite results were obtained in a series of other works, but the capacity region has been found only in particular cases.

A random coding bound for the E-capacity region of the GIFC in the case of average error probability is obtained.

Broadcast channels (BC) were first studied by Cover 1972. The capacity region of the deterministic BC was found by Pinsker 1978 and Marton 1979. The capacity region of the BC with one deterministic component was determined independently by Marton 1979 and Gelfand and Pinsker 1980. The capacity region of the asymmetric BC was found by Körner and Marton 1977. Several models of the BC have been considered in many works, but the capacity region of the BC in the situation where two private messages and one common message must be transmitted has still not been found. Willems 1990 proved that the capacity regions of the BC for maximal and average error probabilities are the same.

It is proved that the region ![]() is an inner estimate for the E-capacity region of the BC.

When ![]() , ![]() , time-sharing arguments yield the inner bound for the capacity region ![]() of the BC obtained by Marton 1979.

There exist various configurations of the multiple-access channel (MAC). The most general model, the MAC with correlated encoder inputs, was first studied by Slepian and Wolf 1973. Three independent sources create messages to be transmitted by two encoders. One of the sources is connected with both encoders, and each of the other two is connected with only one of the encoders.

Dueck 1978 showed that, in general, the maximal error capacity region of the MAC is smaller than the corresponding average error capacity region. Determination of the maximal error capacity region of the MAC in various communication situations is still an open problem.

Inner and outer bounds for the E-capacity region of the MAC with correlated encoders are constructed. When ![]() , we obtain inner and outer estimates for the channel capacity region whose expressions are similar but differ in the probability distributions ![]() and ![]() . The inner bound coincides with the capacity region.

As special cases, the regular MAC, the asymmetric MAC, the MAC with cribbing encoders, and the channel with two senders and two receivers are considered.

P. Harremoes and F. Topsoe: Information theoretic aspects of Poisson's law (see [B47])

T. F. Havel: Quantum Information Science and Technology

Current progress in integrated circuit fabrication, and nanotechnology more generally, promises to bring engineering to the quantum realm within the next two decades. To fully realize the potential of these technologies it will be necessary to develop the theoretical and experimental tools needed for the coherent control of quantum systems. The ultimate goal is a quantum computer, which will be able to perform conditional logic operations over coherent superpositions of states, thereby attaining a degree of parallelism which grows exponentially with the size of the problem. For only a few problems, however, have quantum algorithms been discovered which use interference effects to efficiently extract a single unambiguous result from the final superposition.

It has recently been found that macroscopic ensembles of quantum systems can use additional, albeit purely classical parallelism to accelerate the search for any desired result by a potentially large constant factor. Because incoherent averaging effects provide resilience against common forms of errors in quantum operations, such an ensemble quantum computer is also significantly easier to realize experimentally. In fact, these averaging effects can even be used to select the signals from a single quantum state out of a nearly random ensemble at thermal equilibrium. By these means it has now been possible to demonstrate all the basic principles of quantum computing by conventional liquid-state NMR spectroscopy.

There are, nonetheless, a number of practical and theoretical limits on the size of the computations which can be performed by liquid-state NMR. A more immediately practical goal may be to use NMR to simulate the dynamics of other quantum systems that are more difficult to study experimentally. This idea of simulating one quantum system by another originated with Richard Feynman, although it is implicit in older and well-established average Hamiltonian and Liouvillian techniques for designing NMR pulse-sequences. As an example of this approach, the results of experiments which simulate the first four levels of a quantum harmonic oscillator will be presented.

A.S. Holevo: On entanglement-assisted capacity of quantum channel

A simplified proof of the recent theorem of Bennett, Shor, Smolin, and Thapliyal concerning the entanglement-assisted classical capacity of a quantum channel is presented. A basic component of the proof is a continuity property of entropy, which is established by making heavy use of the notion of strong typicality from classical information theory. The relation between the entanglement-assisted and unassisted classical capacities of a quantum channel is discussed.
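For orientation, the theorem in question can be stated in standard notation, which is not reproduced in this abstract: the entanglement-assisted classical capacity of a channel Φ is the maximal quantum mutual information over input states ρ. Here H is the von Neumann entropy and H(ρ, Φ) is the entropy exchange, computed from a purification ψ_ρ of ρ.

```latex
C_{E}(\Phi) = \max_{\rho} \bigl[\, H(\rho) + H(\Phi(\rho)) - H(\rho, \Phi) \,\bigr],
\qquad
H(\rho, \Phi) = H\bigl( (\Phi \otimes \mathrm{Id})(|\psi_\rho\rangle\langle\psi_\rho|) \bigr)
```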

J.R. Hurford: The neural basis of predicate-argument structure

Neural correlates exist for a basic component of logical formulae, PREDICATE(x).

Vision and audition research in primates and humans shows two independent neural pathways: one locates objects in body-centered space, and the other attributes properties, such as colour, to objects. In vision these are the dorsal and ventral pathways. In audition, similarly separable 'where' and 'what' pathways exist. PREDICATE(x) is a schematic representation of the brain's integration of two processes: the delivery by the senses of the location of an arbitrary referent object, mapped in parietal cortex, and the analysis of the properties of the referent by perceptual subsystems.

The brain computes actions using a few 'deictic' variables pointing to objects. Parallels exist between such non-linguistic variables and linguistic deictic devices. Indexicality and reference have linguistic and non-linguistic (e.g. visual) versions, sharing the concept of attention. The individual variables of logical formulae are interpreted as corresponding to these mental variables. In computing action, the deictic variables are linked with 'semantic' information about the objects, corresponding to logical predicates.

Mental scene-descriptions are necessary for the practical tasks of primates and pre-exist language phylogenetically. The type of scene-descriptions used by non-human primates would be reused for more complex cognitive, and ultimately linguistic, purposes. The provision by the brain's sensory/perceptual systems of about four variables for temporary assignment to objects, together with the separate processes of perceptual categorization of the objects so identified, constitutes a preadaptive platform on which an early system for the linguistic description of scenes developed.

H. Jürgensen: Synchronizing codes

In modern communication, synchronization errors arising from various physical defects in the communication links are quite common. When retransmission is not a problem, error detection together with a protocol for handling errors can take care of this. When retransmission is not feasible, as in high-volume traffic or deep-space communication, coding techniques that allow for the detection and correction of synchronization errors need to be employed. Error models for the types of channels used in modern communication, and requirements for codes dealing with these error models, need to be developed. We shall outline some of the current technical issues and present some techniques for coping with synchronization errors.

G.O.H. Katona: A coding problem for pairs of sets

Let ![]() be a finite set of

be a finite set of ![]() elements,

elements, ![]() an integer.

Suppose that

an integer.

Suppose that ![]() and

and ![]() are pairs of disjoint

are pairs of disjoint ![]() -element subsets of

-element subsets of ![]() (that is,

(that is,

![]() ,

,

![]() ,

,

![]() ). Define the distance of these pairs by

). Define the distance of these pairs by

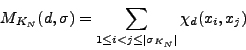

![]() . One can see that this is really a distance on the space of such pairs.

. One can see that this is really a distance on the space of such pairs. ![]() denotes the maximum number of pairs

denotes the maximum number of pairs ![]() with pairwise difference at least

with pairwise difference at least ![]() . The motivation comes from database theory. The lower and upper estimates use Hamiltonian type theorems, traditional code constructions and Rödl's method for packing.

. The motivation comes from database theory. The lower and upper estimates use Hamiltonian type theorems, traditional code constructions and Rödl's method for packing.

H.K. Kim and V. Lebedev: On optimal superimposed codes

A ![]() cover-free family is a family of subsets of a finite set such that no intersection of ![]() members of the family is covered by a union of ![]() others. A binary ![]() superimposed code is the incidence matrix of such a family. Such families also arise in cryptography as the concept of key distribution patterns. In the present paper, we develop methods of constructing such families and prove that some of them are optimal.
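A brute-force check can make the cover-free condition concrete. This is only an illustrative sketch: the parameter symbols are missing from this text, so the names `w` (number of members intersected) and `r` (number of other members in the covering union) are our own, and the exhaustive search is exponential, so it suits only very small families.

```python
from itertools import combinations

def is_cover_free(family, w, r):
    """Check the (w, r) cover-free property by exhaustive search.

    family: list of sets, one per member (one per codeword of the
    superimposed code). Returns True if, for every choice of w members and
    r *other* members, the intersection of the w members is not contained
    in the union of the r others. Requires w >= 1.
    """
    members = [frozenset(m) for m in family]
    indices = range(len(members))
    for inside in combinations(indices, w):
        common = frozenset.intersection(*(members[i] for i in inside))
        others = [j for j in indices if j not in inside]
        for outside in combinations(others, r):
            covering = frozenset().union(*(members[j] for j in outside))
            if common <= covering:   # intersection is covered: property fails
                return False
    return True

# Hypothetical example: the three 2-subsets of {0, 1, 2}.
triangle = [{0, 1}, {1, 2}, {0, 2}]
```

Here `is_cover_free(triangle, 1, 1)` and `is_cover_free(triangle, 2, 1)` hold, but `is_cover_free(triangle, 1, 2)` fails because {0, 1} lies inside {1, 2} ∪ {0, 2}.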

K. Kobayashi: When the entropy function appears in the residue

The generalized Dirichlet series of a harmonic sum sometimes yields a meromorphic function whose residue contains the entropy function. We give a typical case in which the entropy function appears in the residue by investigating the Bernoulli splitting process induced by a biased coin. Furthermore, the idea extends easily from the binary process to the K-ary splitting process induced by a biased die.

S. Konstantinidis: General models of discrete channels and the properties of error-detection and error-correction

1 Introduction

Communication of information presupposes the existence of a channel (the information medium) and a language whose words can be used to encode the information that needs to be transferred via the channel. Depending on the characteristics of the channel and the objectives of the application, the communication language satisfies certain properties such as error-correction, error-detection, low redundancy, and low encoding and decoding complexity. The classical theory of error-correcting codes [14], [15] addresses such properties. Usually, it considers probabilistic models of channels involving substitution errors and (nonprobabilistic) methods for constructing codes with error-handling capabilities, where error situations with low enough probability are not considered. By ignoring improbable error situations, it is also possible to consider nonprobabilistic channel models. This approach is taken, for instance, in [13], [4], [16], [1], [6], [7], [8], where channels may allow synchronization errors, possibly in addition to substitution errors. It is taken implicitly in other works as well, such as [3]. In this abstract we describe general models of discrete channels and define the properties of error-detection, error-correction, and unique decodability with respect to such channels. With this framework in mind, the talk may include results related to the following topics:

2 General Models of Discrete Channels

Informally, we use the term channel for a discrete mathematical model of a physical medium capable of carrying or storing elements that can be represented using words of a language. The medium could be anything such as a computer memory, a wire, a DNA test tube, a computer typesetter, etc. In each case, the error types permitted and the way they are combined might be different and in some cases not well understood. Let ![]() be an alphabet (e.g., ![]() = the binary alphabet, ![]() = the DNA alphabet). Then ![]() is the set of all words over ![]() . A language (over ![]() ) is any subset of ![]() . A binary relation (over ![]() ) is any subset of ![]() . The domain ![]() of a binary relation ![]() is the set ![]() for some word ![]() .

We proceed with more specific classes of channels.

Rational channels include channels that are rather unreasonable in practice. They offer, however, the following advantages:

The class of SID channels allows one to model various ways of combining substitution, insertion, and deletion errors (SID errors) [6], [8]. Such channels were first considered explicitly in [13]. Here we present a subset of the full class of SID channels. An error type ![]() is an element of the set

In [4], the authors define a channel model based on substitutions (a term for generalized homomorphisms; see below) that allows one to model various error situations. Properties of unique decodability and synchronizability for this channel model are studied in [16], [1], and [2], for instance. Let ![]() be the source alphabet and let ![]() be a substitution function from ![]() into the finite subsets of ![]() ; that is, for each ![]() , ![]() is a set of words over ![]() that represents the possible forms of ![]() when it is received via the channel. The fact that ![]() is a substitution implies that ![]() for all source symbols ![]() and ![]() . This channel model fits into the framework of Definition 1; in this form the channels are called homophonic [7]. In fact, it is a special case of the rational channel model.
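To make the substitution model concrete, here is a minimal sketch. It assumes the substitution property stated above means that the possible outputs of a concatenation are exactly the concatenations of the possible outputs of its symbols. The function name `channel_outputs` and the example mapping `sigma` are hypothetical.

```python
from itertools import product

def channel_outputs(word, sigma):
    """All words that `word` can become through the substitution channel.

    sigma maps each source symbol to a finite set of output words. Because
    sigma is a substitution, the outputs of a concatenation are exactly the
    concatenations of the outputs of its symbols (the empty word yields {""}).
    """
    choices = [sigma[a] for a in word]
    return {"".join(parts) for parts in product(*choices)}

# Hypothetical example: 'a' may pick up an inserted 1, 'b' may be deleted.
sigma = {"a": {"0", "01"}, "b": {"1", ""}}
outputs_ab = channel_outputs("ab", sigma)   # {"0", "01", "011"}
```

Insertions and deletions are modelled here only through the choice of output words per symbol, which is what makes such channels a restricted but convenient special case.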

3 Error-Detection and Error-Correction

Let ![]() be a channel. A language ![]() is error-detecting for ![]() [11] if ![]() implies ![]() for all words ![]() , where ![]() and ![]() is the empty word.

Assuming that only words from ![]() are sent into the channel, if ![]() is retrieved from the channel and ![]() is in ![]() , then ![]() must be correct; that is, equal to the word that was sent into the channel. On the other hand, if the word retrieved from the channel is not in ![]() , then an error is detected.

The use of ![]() as opposed to ![]() ensures that ![]() cannot be received from a word in ![]() and that no word in ![]() can be received from ![]() .

Of particular interest is the case where the language ![]() is coded; that is, ![]() for some (uniquely decodable) code ![]() . In this case, it is possible to define the concept of error-detection with finite delay [11], [12].

A language ![]() is error-correcting for the channel ![]() [10] if ![]() implies that ![]() for all words ![]() and ![]() , where ![]() .

Assuming that only words from ![]() are sent into the channel, if ![]() is retrieved from the channel, then there is exactly one word from ![]() that has resulted in ![]() . Therefore, even if ![]() has been received with errors, one can in principle find the word ![]() with ![]() , thus correcting the errors in ![]() . In the definition, the use of ![]() ensures that the empty word and a nonempty word of ![]() can never result in the same output through the channel ![]() .

It should be clear that if a language is error-correcting for ![]() , then it is also error-detecting for ![]() , assuming that the language is included in the domain of the channel ![]() .

A code ![]() is uniquely decodable for the channel ![]() (or ![]() -correcting [7]) if the language ![]() is error-correcting for ![]() .
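Both properties can be checked mechanically for a finite channel. The sketch below follows the verbal descriptions above, since the original formulas are not reproduced in this text. As an assumption, a channel is modelled as a Python dict from input words to sets of possible outputs, and the language is extended by the empty word as the text prescribes. The example channel allowing at most one substitution is hypothetical.

```python
from itertools import product

def with_empty(language):
    # The language together with the empty word.
    return set(language) | {""}

def is_error_detecting(language, channel):
    """channel maps each input word to the set of words it can be received as.
    No word of L + {""} may be received as a *different* word of L + {""}."""
    ext = with_empty(language)
    return all(w == v or w not in ext
               for v in ext for w in channel.get(v, set()))

def is_error_correcting(language, channel):
    """Two distinct words of L + {""} must never share a possible output."""
    ext = sorted(with_empty(language))
    return all(not (channel.get(v, set()) & channel.get(z, set()))
               for i, v in enumerate(ext) for z in ext[i + 1:])

def at_most_one_substitution(words):
    """Hypothetical example channel: a binary word is received unchanged
    or with exactly one bit flipped."""
    flip = {"0": "1", "1": "0"}
    return {v: {v} | {v[:k] + flip[c] + v[k + 1:] for k, c in enumerate(v)}
            for v in words}

channel = at_most_one_substitution([""] + ["".join(p) for p in product("01", repeat=3)])
```

With this channel the repetition code {000, 111} is both error-detecting and error-correcting, while {000, 011} only detects a single substitution, because both of its words can be received as 001.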

E.V. Konstantinova: Applications of information theory in chemical graph theory

Information theory has been used in various branches of science. In recent years it has been applied extensively in chemical graph theory for describing chemical structures and for establishing good correlations between physico-chemical and structural properties. In this talk we survey old and new results concerning applications of information theory to the characterization of molecular structures. The Shannon formula is used for constructing information topological indices. These indices are investigated with respect to their basic characteristics, namely their ability to correlate with a molecular property and their discriminating power.
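As one simple illustration of how the Shannon formula yields a topological index, we partition the vertices of a molecular graph into classes of equal degree and compute the entropy of the class sizes. This degree-based example is our own choice, in the spirit of such indices, and is not necessarily one of the indices surveyed in the talk. The function name and the example graphs are hypothetical.

```python
from collections import Counter
from math import log2

def degree_information_index(edges):
    """Shannon information (bits) of the partition of the vertices of a graph,
    given as an edge list, into classes of vertices with equal degree.
    Isolated vertices do not appear in the edge list and are ignored."""
    degree = Counter()
    for u, v in edges:
        degree[u] += 1
        degree[v] += 1
    n = len(degree)
    class_sizes = Counter(degree.values()).values()
    return -sum(k / n * log2(k / n) for k in class_sizes)

# Hydrogen-suppressed carbon skeletons of the two C4H10 isomers.
n_butane = [(1, 2), (2, 3), (3, 4)]     # degrees 1, 2, 2, 1
isobutane = [(0, 1), (0, 2), (0, 3)]    # degrees 3, 1, 1, 1
```

The index separates the two isomers (1 bit for n-butane, about 0.811 bits for isobutane), which is the kind of discriminating power the abstract refers to.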

V. Levenshtein: Combinatorial and Probabilistic Problems of Sequence Reconstruction

We consider new problems of reconstructing an unknown sequence of fixed length from the minimum number of its patterns that are distorted by errors of a given type. These problems are completely solved for combinatorial channels with substitutions, permutations, deletions, and insertions of symbols. An asymptotically tight solution is also found for a discrete memoryless channel and for the continuous channel with discrete time and additive Gaussian noise.

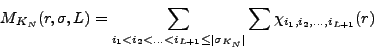

T. Berger and V. Levenshtein: Coding Theory and Two-Stage Testing Algorithms

The problems of finding optimal linear codes and of constructing optimal two-stage tests are connected through the need to find an optimum parity check matrix and an optimum test design matrix, respectively. The efficiency of two-stage testing of n items, which are active or not according to a Bernoulli distribution with parameter ![]() , is characterized by the minimum expected number ![]() of disjunctive tests, where the minimum is taken over all test matrices used in the first stage. We use coding theory methods to obtain new upper and lower bounds which determine the asymptotic behavior of ![]() up to a constant factor as ![]() tends to infinity and ![]() tends to ![]() .
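To illustrate two-stage disjunctive testing, here is the classical Dorfman scheme, a textbook baseline rather than the test matrices studied in this talk. The first stage tests disjoint groups of k items, and a pooled test is positive exactly when its group contains an active item. The second stage tests every item of a positive group individually. Assuming items are active independently with probability p, the expected number of tests per item is 1/k + 1 - (1 - p)^k. The function names below are our own.

```python
def dorfman_tests_per_item(p, k):
    """Expected number of tests per item for Dorfman's two-stage scheme with
    group size k, each item active independently with probability p."""
    first_stage = 1.0 / k                  # one pooled test per group of k
    second_stage = 1.0 - (1.0 - p) ** k    # P(group positive): retest all k
    return first_stage + second_stage

def best_group_size(p, k_max=200):
    # Brute-force search for the group size minimizing expected tests per item.
    return min(range(1, k_max + 1), key=lambda k: dorfman_tests_per_item(p, k))
```

For p = 0.01 the search picks groups of 11 items, at roughly 0.196 tests per item, compared with one test per item for individual testing. Pooling pays off only while this value stays below 1.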

M. Blaeser, A. Jakoby, M. Liskiewicz, and B. Siebert: Private Computations on ![]() -Connected Communication Networks


We study the role of the connectivity of the communication network in private computation in the information-theoretic setting. In the talk, a general technique will be shown for simulating private protocols on any communication network by private protocols on an arbitrary ![]() -connected network that use only a small number of additional random bits for this simulation. Furthermore, it will be proved that some non-trivial functions can be computed privately even if the network used is ![]() -connected but not ![]() -connected. Finally, a sharp lower bound will be given for the number of random bits needed to compute some concrete functions on all ![]() -connected networks.

K. Mainzer: Information Dynamics in Nature and Society. An Interdisciplinary Approach

In the age of the information society, concepts of information are an interdisciplinary challenge for mathematics and computer science, the natural and social sciences, and, last but not least, the humanities. The reason is that the evolution of nature and mankind can be considered as an evolution of information systems. Against the background of the theories of information, computability, and nonlinear dynamics, we suggest a unified concept of information in order to study the flow of information in complex dynamical systems, from atomic, molecular, and cellular systems to brains and populations. During the past few years, natural evolution has become a blueprint for the technical development of information systems, from quantum and molecular computing to bio- and neurocomputing. Mankind is growing together with the information and communication systems of global networking. Their information flow has remarkable analogies with the nonlinear dynamics of traffic flows. Net and information chaos require new methods of information retrieval. In the age of globalization, our understanding and management of information flows in complex systems is one of the most urgent challenges for a sustainable future of the information society.

M.B. Malyutov: Non-Parametric Search for Significant Inputs of Unknown System

Suppose we can measure the output of a system whose input is a binary ![]() -tuple ![]() . It is known that the output (which may have a continuous distribution) depends only on an ![]() -tuple ![]() of the inputs labeled by a subset ![]() of ![]() with ![]() . ![]() is equally likely, a priori, to be any ![]() -subset of ![]() inputs. Fixing the inputs in ![]() trials (the design), we observe outputs that are conditionally independent given the design. We study the choice of design and of output analysis that minimizes the number ![]() of trials needed to find the set ![]() under a prescribed upper bound ![]() on the error probability. This problem turns out to be closely related to the study of optimal transmission rates for multiple-access channels (MAC) with or without feedback. Asymptotic bounds for ![]() are found for small ![]() and/or large ![]() . The cases of fixed and increasing ![]() , and of sequential and non-sequential designs, are studied. We also study a universal, asymptotically optimal nonparametric analysis of outputs that is applicable without any knowledge of the system and is based on maximizing the Shannon information between certain classes of input-output empirical distributions.
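The information-maximization idea in the last sentence can be illustrated with a toy, noiseless version. This sketch is our own simplification rather than Malyutov's procedure: outputs are assumed discrete, the design is drawn uniformly at random, and the estimate of the significant set is the s-subset of inputs whose empirical (plug-in) mutual information with the outputs is largest. All names and the example system are hypothetical.

```python
import random
from collections import Counter
from itertools import combinations
from math import log2

def empirical_mutual_information(xs, ys):
    """Plug-in estimate, in bits, of I(X;Y) from paired samples."""
    n = len(xs)
    joint = Counter(zip(xs, ys))
    px, py = Counter(xs), Counter(ys)
    return sum(c / n * log2(c * n / (px[x] * py[y]))
               for (x, y), c in joint.items())

def find_significant_inputs(design, outputs, s):
    """Return the s-subset of input positions whose values carry the most
    empirical information about the observed outputs."""
    t = len(design[0])

    def score(subset):
        xs = [tuple(row[i] for i in subset) for row in design]
        return empirical_mutual_information(xs, outputs)

    return max(combinations(range(t), s), key=score)

# Hypothetical system: 8 binary inputs, output = XOR of inputs 2 and 5.
rng = random.Random(1)
design = [[rng.randint(0, 1) for _ in range(8)] for _ in range(300)]
outputs = [row[2] ^ row[5] for row in design]
estimate = find_significant_inputs(design, outputs, 2)
```

No single input carries any information about an XOR output, so the example shows why the search is over whole subsets. The true pair's score equals the empirical output entropy, whereas every wrong pair scores close to zero.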

James L. Massey: Information Transfer with Feedback

Whereas information theory has been very successful in characterizing the technical aspects of information transfer over channels without feedback, it has been much less successful in dealing with channels with feedback. Shannon himself in 1973 seemed to suggest that feedback communication needed rethinking by information theorists. Some reasons for this failure of information theory will be proposed. Some possible approaches to a theory of feedback communication, based on directed information and causally conditioned directed information, will be discussed.
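For reference, here is the directed information Massey introduced (1990), in standard notation not given in this abstract, with X^n = (X_1, ..., X_n). It is shown alongside the chain-rule form of ordinary mutual information and Kramer's causally conditioned variant given a third sequence Z^N.

```latex
I(X^N \to Y^N) = \sum_{n=1}^{N} I(X^n; Y_n \mid Y^{n-1}),
\qquad
I(X^N; Y^N) = \sum_{n=1}^{N} I(X^N; Y_n \mid Y^{n-1}),

I(X^N \to Y^N \,\|\, Z^N) = \sum_{n=1}^{N} I(X^n; Y_n \mid Y^{n-1}, Z^n)
```

The only difference from mutual information is that the n-th term conditions on the inputs up to time n rather than on the whole input block. Massey showed that I(X^N → Y^N) ≤ I(X^N; Y^N), with equality when there is no feedback.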

C. Mauduit: Measures of pseudorandomness for finite sequences

I will present a survey of recent results concerning the pseudorandomness of finite binary sequences. In a series of papers, A. Sárközy and I introduced new measures of pseudorandomness connected to the regularity of the distribution relative to arithmetic progressions and to correlations. We analysed and compared several constructions, including the Legendre symbol, the Thue-Morse sequence, the Rudin-Shapiro sequence, the Champernowne sequence, the Liouville function (jointly with J. Cassaigne, S. Ferenczi and J. Rivat), and a further construction due to P. Erdős related to a diophantine approximation problem. We also study the expectation and the minima of these measures and the connection between correlations of different orders.
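The two basic measures can be computed by brute force for short sequences. The sketch below uses the standard Mauduit-Sárközy definitions of the well-distribution measure W and the correlation measure C_k of a ±1 sequence (with 0-based indices in the code), together with the Legendre symbol sequence computed by Euler's criterion. The function names are ours, and the cubic running time restricts the sketch to small N.

```python
from itertools import combinations

def legendre_sequence(p):
    """e_n = (n/p) for n = 1..p-1 (p an odd prime), via Euler's criterion."""
    return [1 if pow(n, (p - 1) // 2, p) == 1 else -1 for n in range(1, p)]

def well_distribution(e):
    """W: max |e_a + e_{a+b} + ... + e_{a+(t-1)b}| over all arithmetic
    progressions lying inside the sequence."""
    n, best = len(e), 0
    for b in range(1, n + 1):
        for a in range(n):
            s = 0
            for idx in range(a, n, b):   # extend the progression term by term
                s += e[idx]
                best = max(best, abs(s))
    return best

def correlation(e, k):
    """C_k: max |sum_{i<M} e_{i+d_1} ... e_{i+d_k}| over
    0 <= d_1 < ... < d_k and M + d_k <= N."""
    n, best = len(e), 0
    for d in combinations(range(n), k):
        s = 0
        for i in range(n - d[-1]):       # partial sums for every admissible M
            prod = 1
            for dj in d:
                prod *= e[i + dj]
            s += prod
            best = max(best, abs(s))
    return best

e = legendre_sequence(101)
W, C2 = well_distribution(e), correlation(e, 2)
```

A truly random ±1 sequence of length N typically has both measures of order √N up to logarithmic factors. Large values, like W = N for a constant sequence, signal poor pseudorandomness.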

M. Loewe, F. Merkl, and Silke Rolles: Moderate deviations for longest increasing subsequences in random permutations

The distribution of the lengths of longest increasing subsequences in random permutations has attracted much attention, especially in the last five years. In 1999, Baik, Deift, and Johansson determined the (nonstandard) central limit behaviour of these random lengths. They use deep methods from complex analysis and integrable systems, especially noncommutative Riemann-Hilbert theory. The large deviation behaviour of the same random variables was determined earlier by Seppäläinen, Deuschel, and Zeitouni, using more classical large deviation techniques. In the talk, some recent results on the moderate deviations of the length of longest increasing subsequences are presented. These concern the domain between the central limit regime and the large deviation regime. The proof is based on a Gaussian saddle point approximation around the stationary points of the transition function matrix elements of a certain noncommutative Riemann-Hilbert problem of rank 2.
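The random variable studied here is easy to sample. The sketch below computes the length of a longest increasing subsequence with the standard patience-sorting (binary search) algorithm in O(n log n) time and applies it to a uniformly random permutation. The function names are ours. For large n the length concentrates around 2√n, with fluctuations of order n^{1/6} described by the Baik-Deift-Johansson result mentioned above.

```python
import bisect
import random

def lis_length(seq):
    """Length of a longest strictly increasing subsequence of seq.

    tails[i] is the smallest possible last element of a strictly increasing
    subsequence of length i + 1 among the elements scanned so far."""
    tails = []
    for x in seq:
        i = bisect.bisect_left(tails, x)   # first tail >= x
        if i == len(tails):
            tails.append(x)                # x extends the longest subsequence
        else:
            tails[i] = x                   # smaller tail for length i + 1
    return len(tails)

def random_permutation_lis(n, rng):
    perm = list(range(n))
    rng.shuffle(perm)
    return lis_length(perm)

sample = random_permutation_lis(10_000, random.Random(0))  # order of 2 * sqrt(10_000) = 200
```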

V.N. Koshelev and E.C. van der Meulen: On the duality between successive refinement of information by source coding with fidelity constraints and efficient multi-level channel coding under cost constraints

Shannon (1959) pointed out a certain duality between (one-step) source coding with a fidelity criterion on the one hand and (one-step) coding for a memoryless input-constrained channel on the other. Mathematically, this duality is reflected by two main information-theoretical quantities, known as the rate-distortion function and the capacity-cost function, respectively. Shannon (1959) proved coding theorems showing that the rate-distortion function R(D) can be achieved for average distortion D in the source coding problem, and that the capacity-cost function C(t) can be achieved if the average cost per channel input is at most t in the channel coding problem.

Koshelev (1980) introduced the concept of hierarchical source coding, and provided a sufficient condition for so-called successive refinement (or source divisibility). Equitz and Cover (1991) proved the necessity of this condition. This condition is that the individual solutions of the rate-distortion problems can be written as a Markov chain.

In this presentation we consider multi-level channel coding subject to a sequence of input constraints, and formulate the notion of efficient successive two-level channel coding. We prove sufficient conditions for efficient successive channel coding subject to two cost constraints (channel divisibility). These conditions also involve a Markov-relationship, namely between the individual solutions of the respective capacity-cost problems.
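For reference, the two dual quantities and the source-side successive refinement condition (Koshelev; Equitz-Cover) read as follows in standard notation. The distortion measure d and cost function b are our notational assumptions. The channel-side conditions of the talk are described only as analogous and are not reproduced here.

```latex
R(D) = \min_{p(\hat{x} \mid x):\ \mathbb{E}\, d(X, \hat{X}) \le D} I(X; \hat{X}),
\qquad
C(t) = \max_{p(x):\ \mathbb{E}\, b(X) \le t} I(X; Y)

% Successive refinement from D_1 down to D_2 \le D_1 is possible if and only if
% the optimal reproductions can be chosen to form a Markov chain:
X \;-\; \hat{X}_2 \;-\; \hat{X}_1,
\qquad I(X; \hat{X}_1) = R(D_1), \quad I(X; \hat{X}_2) = R(D_2)
```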

F. Meyer auf der Heide: Data Management in Networks

This talk surveys strategies for distributing and accessing shared objects in large parallel and distributed systems. Examples of such objects are global variables in a parallel program, pages or cache lines in a virtual shared memory system, and shared files in a distributed system such as a distributed data server. I focus on strategies for distributing, accessing, and (consistently) updating such objects that are provably efficient with respect to various cost measures. First, I will give some insight into methods that minimize contention at the memory modules, based on redundant hashing strategies. This is of interest, e.g., for the design of real-time storage networks, as we develop within our PReSto project in Paderborn. The main focus of this talk is on strategies tailored to situations where the bandwidth of the network (rather than the contention at the memory modules) is the bottleneck, so the aim is to organise the shared objects in such a way that congestion is minimized. I first present schemes that are efficient w.r.t. information about read and write frequencies. The main part of the talk deals with online, dynamic, redundant data management strategies that have a good competitive ratio, i.e., that are efficient compared to an optimal dynamic offline strategy constructed with full knowledge of the dynamic access pattern. In particular, the case of memory restrictions in the processors will be discussed.
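The effect of redundant hashing on contention can be illustrated with the balls-into-bins model. The simplifying assumptions are our own, and the strategies in the talk may differ. Each request has d candidate memory modules, chosen by independent hash functions that are modelled here by uniform random choices. The request is sent to the currently least-loaded candidate. With n requests on n modules, the maximum load grows like ln n / ln ln n for a single choice, but only like ln ln n / ln 2 for two choices. The function name is hypothetical.

```python
import random


def max_load(n_items, n_bins, choices, seed=0):
    """Place each item in the least-loaded of `choices` random bins; return the maximum load.

    Uniform random bin indices stand in for `choices` independent hash functions."""
    rng = random.Random(seed)
    load = [0] * n_bins
    for _ in range(n_items):
        candidates = [rng.randrange(n_bins) for _ in range(choices)]
        best = min(candidates, key=lambda b: load[b])  # least-loaded candidate module
        load[best] += 1
    return max(load)


if __name__ == "__main__":
    n = 10_000
    for d in (1, 2, 3):
        print(f"d={d}: max load {max_load(n, n, d)}")
```

Most of the gain comes from going from one to two choices; further choices help only by a constant factor.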

A. Miklosi: Some general insights of animal communication from studying interspecific communication

There are two extreme cases in animal (including human) communication. At one extreme, animal communication can be viewed as a behavioral regulatory mechanism between conspecifics. Such communication can be witnessed in aggressive contests or during courtship. In this case, communicative signals are part of a closed behaviour system in which the "information content" of the signalling is limited. At the other extreme, communication is a means to modify the mental content of another individual by using various forms of referential signalling. These systems are characterised by their openness, and learning also plays some part in both the production and the comprehension of communicative signals. Assuming that brains represent mental models of the environment, the main question is how signals that are the product of one mental model can influence the representations in another. Within a species it can be assumed that mental models are correspondingly similar to each other, and therefore signals will have the same effect on each individual. If a signal plays the same role in the mental models of both communicating individuals, we might talk of 100% correspondence. In the case of interspecific communication, correspondence can be much lower. In the dog's mental model of the environment, the call "Come here!" represents a very different component from the one it represents in the mental model of a human hearing the same utterance. There are two ways of increasing correspondence in interspecific communication. If there is time available, genetic changes could contribute to changes in the communicative systems of both species that increase correspondence. In the other, not mutually exclusive, case, individuals of both species should engage in learning to increase correspondence. This in turn assumes some flexibility in both the comprehension and the production of communicative signals.
Species with more relaxed motor patterns and less selective recognition systems have an advantage here. In my overview of this topic I will illustrate this using the naturally occurring communicative behaviour of honeyeater birds and humans, and the differences and similarities of human-dog and human-ape communication.

F. Cicalese and D. Mundici: Learning, and the art of fault-tolerant guesswork

Fix two positive integers $m$ and $e$. The smallest integer $q$ such that $2^{q} \ge 2^{m} \sum_{j=0}^{e} \binom{q}{j}$ is known as the Berlekamp sphere-packing bound, and is denoted $N_{\min}(2^m, e)$.

In binary search it is impossible to find an element $x \in \{0, 1, \ldots, 2^m - 1\}$ using fewer than $N_{\min}(2^m, e)$ tests, up to $e$ of which may be faulty. On the other hand, using (a minimum of) adaptiveness, for most $m$ and $e$ such an $x$ can be found using exactly $N_{\min}(2^m, e)$ tests.

Whether the same optimality result holds when no adaptiveness is allowed is a basic problem for $e$-error-correcting codes, for which only fragmentary, usually negative, answers are known.

The lower bound $N_{\min}(2^m, e)$ for $e$-fault-tolerant binary search turns out to be an upper bound for the number of wrong guesses in several learning and online prediction models. In most cases, the bound is tight.
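The sphere-packing (volume) bound for searching among $2^m$ candidates with at most $e$ lies is the smallest $q$ with $2^q \ge 2^m \sum_{j=0}^{e} \binom{q}{j}$. It is easy to compute by a linear scan. The sketch below uses a hypothetical function name and the notation $m$, $e$, $q$ chosen for illustration. The scan can start at $q = m$, since no smaller $q$ can satisfy the inequality.

```python
from math import comb


def berlekamp_bound(m, e):
    """Smallest q with 2**q >= 2**m * sum_{j=0}^{e} C(q, j): the minimum number of
    yes/no questions needed to find one of 2**m elements when up to e answers may be lies."""
    q = m  # for q < m even the e = 0 case fails
    while 2 ** q < 2 ** m * sum(comb(q, j) for j in range(e + 1)):
        q += 1
    return q


if __name__ == "__main__":
    for m, e in [(4, 1), (12, 3), (20, 2)]:
        print(f"m={m}, e={e}: q = {berlekamp_bound(m, e)}")
```

Two sanity checks: for $m = 4, e = 1$ the bound is 7, the length of the perfect Hamming [7,4] code. For $m = 12, e = 3$ it is 23, the length of the perfect binary Golay code. In both cases the inequality holds with equality.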

Irene Pepperberg: Lessons from Cognitive Ethology: Models for an Intelligent Learning/Communication System

Computers may be 'smart' in terms of brute processing power, but their ability to learn is limited to what can easily be programmed. Computers presently are analogous to living systems trained in conditioned stimulus-response paradigms. Thus, a computer can solve new problems only if they are very similar to those it has already been programmed to solve. Computers cannot yet form new abstract representations, manipulate these representations, and integrate disparate knowledge (e.g., linguistic, contextual, emotional) to solve novel problems in ways managed by every normal young child. Even the Grey parrots I study, with brains the size of a walnut, succeed on such tasks. Such success likely arises because a parrot, like a young child, has a repertoire of desires and purposes that cause it to form and test ideas about the world and how it can deal with the world; these ideas, unlike conditioned stimulus responses, can amount to representations of cognitive processing. I suggest that by deepening our understanding of the processes whereby nonhumans advance from conditioned responses to representation-based learning, we will begin to uncover rules that can be adapted to nonliving computational systems.

V. Prelov: Epsilon-entropy of ellipsoids in a Hamming space

The asymptotic behavior of the epsilon-entropy of ellipsoids in the Hamming space is investigated as the dimension of the space grows.

R. Reischuk: Algorithmic Aspects of distributions, errors and information

This talk gives an overview of algorithmic issues concerning probability distributions and information transfer that have to be taken into account for efficient realizations. From a computational complexity perspective, we introduce topics such as Algorithmic Learning, Data Mining, Communication Complexity, and Interactive Proof Systems.

Computationally hard problems, for which there is little hope for an efficient solution by standard deterministic algorithms, can be attacked by heuristics or in a more analytical way using parallelism, randomness, approximation techniques, and by considering the average-case behaviour.

We concentrate on average-case analysis, discussing appropriate average-case measures. Since there exists a non-computable universal distribution under which the average-case complexity is as bad as the worst-case complexity, one has to think about which distributions are reasonable or typical for a computational problem, and how one could measure the complexity of distributions themselves. These questions are illustrated for the sorting problem, for graph problems under the random graph model, and for the satisfiability of Boolean formulas. Further issues, such as reductions between distributions, have to be dealt with; these lead to notions like polynomial dominance and equivalence between distributions. As an example of a suitable average-case measure, we discuss the delay of Boolean circuits.
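For sorting, a standard illustration of how much the input distribution matters uses insertion sort. This example is assumed here and is not taken from the talk. Insertion sort performs exactly one swap per inversion of its input. The worst case is $n(n-1)/2$ swaps, while under the uniform distribution on permutations the average is $n(n-1)/4$. The sketch computes both exactly for small $n$ by enumeration; the function names are hypothetical.

```python
from fractions import Fraction
from itertools import permutations


def inversions(perm):
    """Number of pairs i < j with perm[i] > perm[j] (= swaps made by insertion sort)."""
    n = len(perm)
    return sum(1 for i in range(n) for j in range(i + 1, n) if perm[i] > perm[j])


def worst_and_average(n):
    """Exact worst-case and uniform-average inversion count over all n! permutations."""
    counts = [inversions(p) for p in permutations(range(n))]
    return max(counts), Fraction(sum(counts), len(counts))


if __name__ == "__main__":
    for n in range(2, 8):
        worst, avg = worst_and_average(n)
        print(f"n={n}: worst {worst}, average {avg}")
```

Here the gap is only a factor of two. A skewed distribution, for instance one concentrated on nearly sorted inputs, would make the average far smaller, which is why the choice of distribution has to be justified.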

Finally, we present a generalisation of the information transmission problem that models discrete problems in situations where the assumption of fault-free input data is no longer realistic, for example in fields like Molecular Biology. Even then one would like to solve optimization problems with efficiency similar to that achievable in the error-free case. Our goal is to identify cases where such a hope may be realistic, and then to find appropriate algorithmic methods.

R. Reischuk: Computational complexity: from the worst to the average case

We give an overview of the analysis of algorithms and machines with respect to their average-case behaviour. For the worst case there is a well-established framework to measure the efficiency of algorithms that yields a robust classification of the complexity of algorithmic problems. With respect to the average case, things are more subtle.

We discuss suitable average-case measures for different computational models that do not destroy reduction properties and polynomial time equivalence. For nonuniform distributions the algorithmic complexity of the distribution itself turns out to be of importance as well.

We illustrate this approach by considering the delay of Boolean circuits. For certain basic functions one obtains an exponential speedup in the average case compared to the worst case.
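A classical illustration of such a worst-case/average-case gap is binary addition. This is assumed here; the circuit model and delay measure of the talk may differ. In a ripple-carry adder, a carry on worst-case inputs must travel through all n positions. For uniformly random n-bit operands, the longest carry chain is only logarithmic in n on average. The sketch below measures that chain; the function names are hypothetical.

```python
import random


def longest_carry_chain(a, b, n):
    """Longest distance a carry travels in a ripple-carry adder on n-bit inputs a, b.

    A chain starts at a 'generate' position (a_i = b_i = 1) and continues through
    the following 'propagate' positions (a_i != b_i)."""
    longest = 0
    current = 0  # length of the carry chain currently travelling, 0 if no carry
    for i in range(n):
        ai, bi = (a >> i) & 1, (b >> i) & 1
        if ai & bi:  # generate: a new carry starts here, independent of the incoming one
            current = 1
        elif (ai ^ bi) and current:  # propagate an incoming carry one position further
            current += 1
        else:  # kill (a_i = b_i = 0), or propagate with no incoming carry
            current = 0
        longest = max(longest, current)
    return longest


def mean_longest_chain(n, trials, seed=0):
    """Average longest carry chain over uniformly random n-bit operand pairs."""
    rng = random.Random(seed)
    total = sum(longest_carry_chain(rng.getrandbits(n), rng.getrandbits(n), n)
                for _ in range(trials))
    return total / trials


if __name__ == "__main__":
    for n in (16, 64, 256, 1024):
        print(f"n={n}: worst case {n}, average {mean_longest_chain(n, 1000):.2f}")
```

Since the time of a ripple-carry addition is governed by the longest chain, the average delay is exponentially smaller than the worst-case delay.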

R. Reischuk: Algorithmic learning of formal languages

Algorithmic Learning deals with the following problem. For a given learning domain (for example, the set of all binary vectors of some fixed length) one has to learn a subset called a concept. The unknown concept is chosen from a given concept class, a subset of the powerset of the learning domain. The learner gets a sequence of examples from the concept. After each example he has to provide a hypothesis about what the concept might be. One would like to minimize the number of wrong guesses and the total amount of work until the learning procedure has converged to a correct hypothesis. Obviously, the effort depends on the concept to be learned and the concept class, but also on the sequence of examples given to the learner.

Several versions of this problem have been studied. Inductive Inference considers learning in the limit, where it is assumed that the sequence of examples is chosen by an adversary, but eventually this sequence has to contain all members of the concept. In Valiant's PAC (probably approximately correct) model, the examples are drawn according to an unknown distribution on the learning domain. Now it is only required to compute an approximation of the concept, which should be close to the true concept with high probability according to the underlying distribution.
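For the PAC model, a standard sample-size bound applies to finite concept classes. It is assumed here and is not stated in the talk. Any learner that outputs a hypothesis consistent with $m \ge (\ln|H| + \ln(1/\delta))/\varepsilon$ examples from a finite class $H$ is, with probability at least $1 - \delta$, within error $\varepsilon$ of the target. Monomials over $n$ Boolean variables give $|H| = 3^n + 1$: each variable appears positively, negatively, or not at all, plus the always-false concept. The function name below is hypothetical.

```python
import math


def pac_sample_bound(ln_hypotheses, eps, delta):
    """Examples sufficient for a consistent learner over a finite class H:
    m >= (ln|H| + ln(1/delta)) / eps, rounded up to an integer."""
    return math.ceil((ln_hypotheses + math.log(1 / delta)) / eps)


if __name__ == "__main__":
    for n in (10, 50, 100):
        ln_h = math.log(3 ** n + 1)  # monomials over n variables
        print(f"n={n}: {pac_sample_bound(ln_h, eps=0.1, delta=0.05)} examples")
```

The bound grows only linearly in n for monomials. It is, however, a worst case over all distributions, which is the kind of pessimism an average-case analysis can avoid.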

The talk discusses a new model called stochastic finite learning, recently introduced by Zeugmann and the author. We study the average-case complexity of learning for natural classes of distributions and illustrate the problem of learning monomials and pattern languages. Very efficient algorithms can be obtained in the 1-variable case, implying that worst-case bounds obtained for limit learning and for PAC learning based on the VC dimension are too pessimistic.
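A concrete instance of the learning protocol above is the classical elimination algorithm for monomials from positive examples. It is not the stochastic finite learner of the talk and only illustrates how the hypothesis evolves after each example. The learner starts with the conjunction of all 2n literals. For each positive example, it deletes the literals that the example makes false. After the first example exactly n literals remain, so the hypothesis changes at most n + 1 times. The function names and literal encoding are hypothetical.

```python
def learn_monomial(examples, n):
    """Run the elimination algorithm on positive examples; return the hypothesis after each one.

    A literal is a pair (i, True) for x_i or (i, False) for NOT x_i. The initial
    hypothesis is the conjunction of all 2n literals (it accepts nothing)."""
    hypothesis = frozenset((i, v) for i in range(n) for v in (True, False))
    history = []
    for ex in examples:  # ex: tuple of n booleans, assumed to satisfy the target monomial
        # keep only the literals that this positive example makes true
        hypothesis = frozenset((i, v) for (i, v) in hypothesis if ex[i] == v)
        history.append(hypothesis)
    return history


def mind_changes(history, n):
    """Number of times the hypothesis changed; at most n + 1 for this learner."""
    previous = frozenset((i, v) for i in range(n) for v in (True, False))
    changes = 0
    for h in history:
        if h != previous:
            changes += 1
        previous = h
    return changes


if __name__ == "__main__":
    # Target monomial: x0 AND NOT x2 over n = 3 variables.
    examples = [(True, False, False), (True, True, False), (True, True, False)]
    history = learn_monomial(examples, 3)
    print(sorted(history[-1]), mind_changes(history, 3))
```

The number of examples the learner needs before its hypothesis stops changing depends on the order and distribution of the examples. This dependence is what an average-case analysis of learning quantifies.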